The widespread adoption of AI carries the risk of content homogenization due to the standardization of outputs. However, like any tool, AI can yield unique and spectacular results when used correctly and creatively. This is the perspective of Riccardo Franco-Loiri, a.k.a. Akasha, a Turin-based visual artist and performer who believes that «art and science have always been tools for exploring the world.» His work spans architectural video mapping, immersive performances, and audiovisual installations. Since 2018, together with High Files, the studio he co-founded, he has specialized in visual design for musical and theatrical shows. His notable collaborations include stage productions for Subsonica, Lazza, Capo Plaza, and Angelina Mango.

There is an ongoing debate about the use of new technologies in the arts—from autotune in music to generative AI in visual fields. How has the concept of creativity evolved, and why is AI a powerful tool for those who embrace it?

«The hype surrounding the latest wave of AI advancements (LLMs like GPT-4 and diffusion models for images), comparable to that of an industrial revolution, is the result of an ultra-accelerationist mindset that no longer questions the direction being taken, only the speed at which discoveries emerge. As both an enthusiast and a critic of numerous AI-based models, I am immersed in an endless stream of innovations. It can seem as though following the latest updates on a GitHub page, or testing the beta version of an unreleased model, is what dictates aesthetic trends. This is a significant problem: the standardization of output is a tangible risk for generative AI. I believe it stems from several factors: the ability to create complex animations is now within everyone's reach, which removes the technical-knowledge barrier and reduces creation to merely writing an effective prompt; styles are flattening into dominant trends circulated via social media; and AI systems carry inherent biases.

By ‘bias,’ we refer to how AI algorithms produce distorted results due to imbalanced training data or specific design choices. To give a concrete example: a year ago, if I had asked a model to generate an image of a ‘beautiful person,’ it likely would have produced a portrait of a young, white woman conforming to Western beauty standards. Conversely, requesting an image of an ‘ugly person’ would often yield an elderly, mixed-race man. This raises social concerns but also influences creativity itself—the datasets that train these systems reflect particular cultures, values, and perspectives, effectively imposing a filter on image generation. Yet, extraordinary ideas can emerge within these biases and AI’s uncanny valley. The creative potential of artificial intelligence fascinated me from the first moment I experimented with a GAN-based system seven years ago. Seeing ‘real’ faces materialize on screen, complete with all the characteristics of a human being, simply by typing the word ‘human,’ left me speechless. These figures seemed authentic precisely because of their imperfections: a slightly hooked nose, one eye half-closed, a chipped tooth, and a wrinkle on the forehead. These are details that society often overlooks, yet I found them even more captivating when artificially generated.

Today, AI models have advanced tremendously, but they have also sacrificed some of their ‘realistic’ qualities in favor of a hyperrealistic aesthetic, in which every flaw is erased and features are exaggerated. However, within the gray area of bias there is room for errors (misinterpretations or unexpected overlaps), and from them something genuinely innovative can emerge. Another key area of my research in AI is hybridization. Early GAN experiments revolved around ‘breeding’ images: each figure was associated with specific keywords, so that images and their metadata could be mixed to build a genealogical tree. This remains one of my primary AI research fields today: exploring the possibility of merging seemingly distant worlds to form new visual and conceptual connections, surpassing physical and computational limitations. I will explore these topics in my talk By Trial and Error, part of Lumen’s event lineup on April 10, from 2:30 to 3:00 PM.»

Your most ‘pop’ projects include visuals for concerts by artists such as Subsonica, Angelina Mango, and Lazza. How do these productions come to life?

«These projects are born among friends, at the desks of a creative lab in Northern Turin that I share with my studio High Files and the association Sintetica, of which we are all part. High Files is my creative hub and a second family: together with Tommaso Rinaldi, with whom I co-founded the project in 2018, we have navigated every challenge through data streams, pixels, blinding lights, and overheated computers.

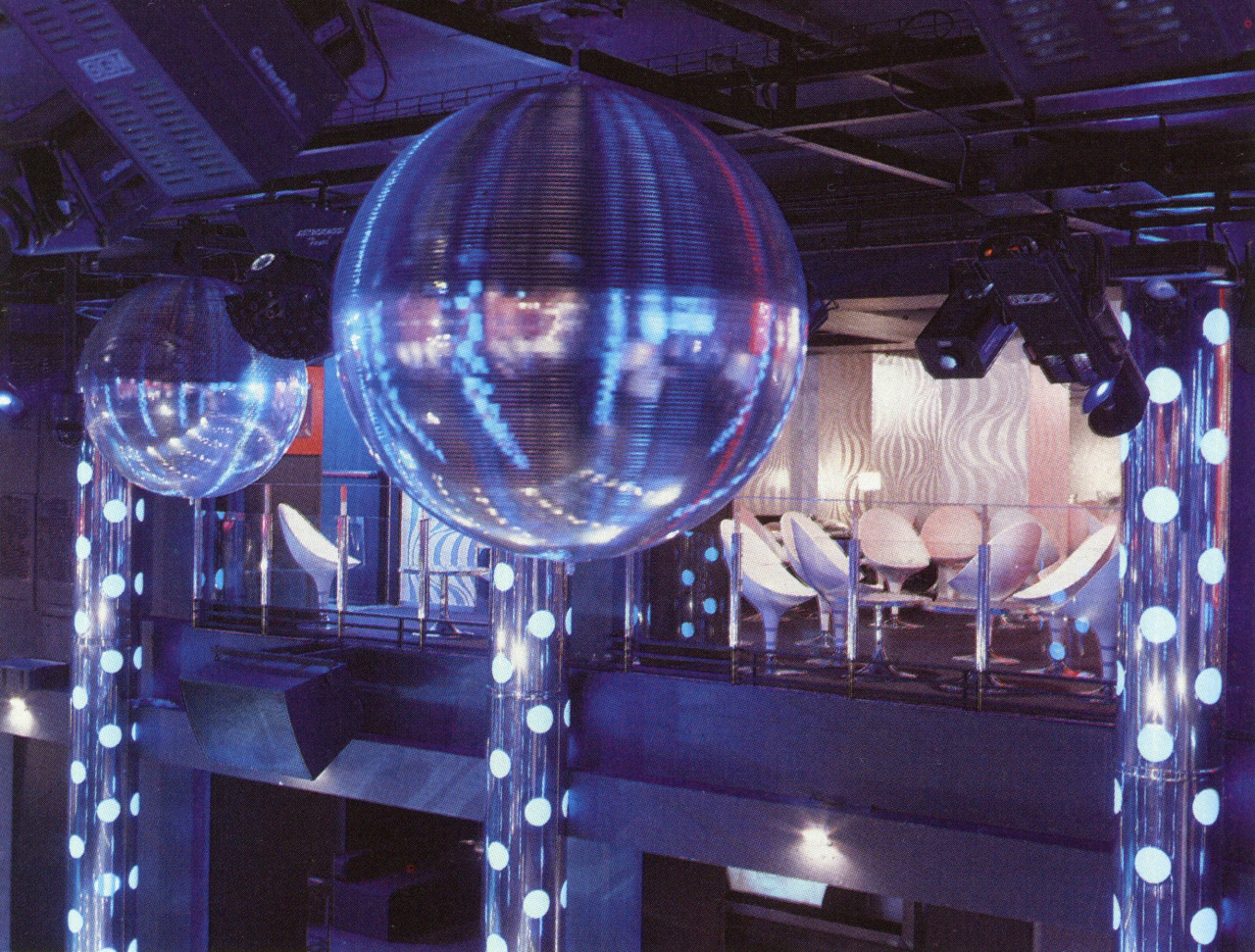

We have always connected deeply with music, collaborating with bands, artists, and festivals worldwide. In recent years, we have focused on large-scale tour productions, enthusiastically shifting from one genre to another. Although it is a constantly evolving environment, we love working with stages, music, and the teams that form around these productions. In particular, we have collaborated intensely with Italian lighting design studios such as Blearred and Studio Neuma.

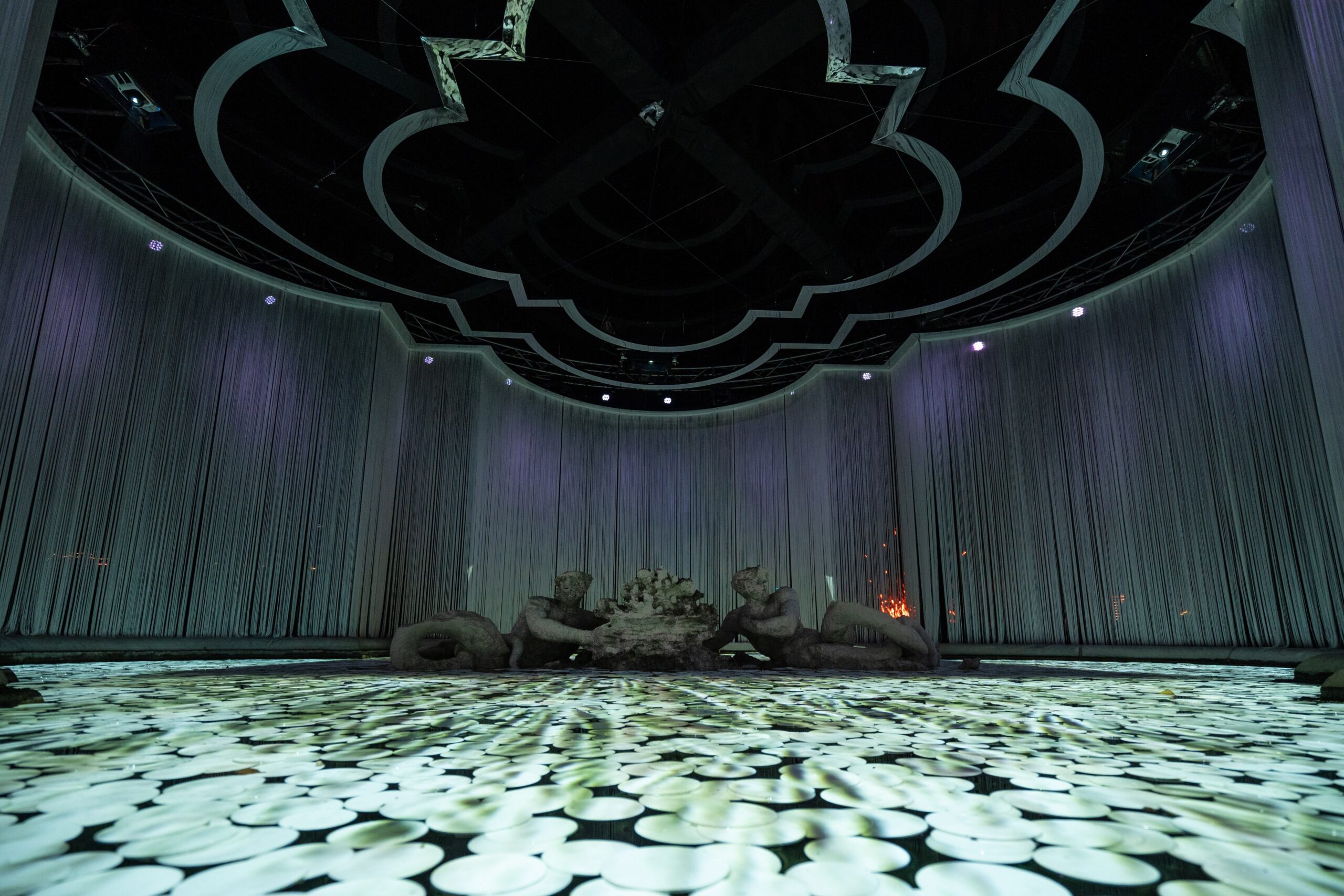

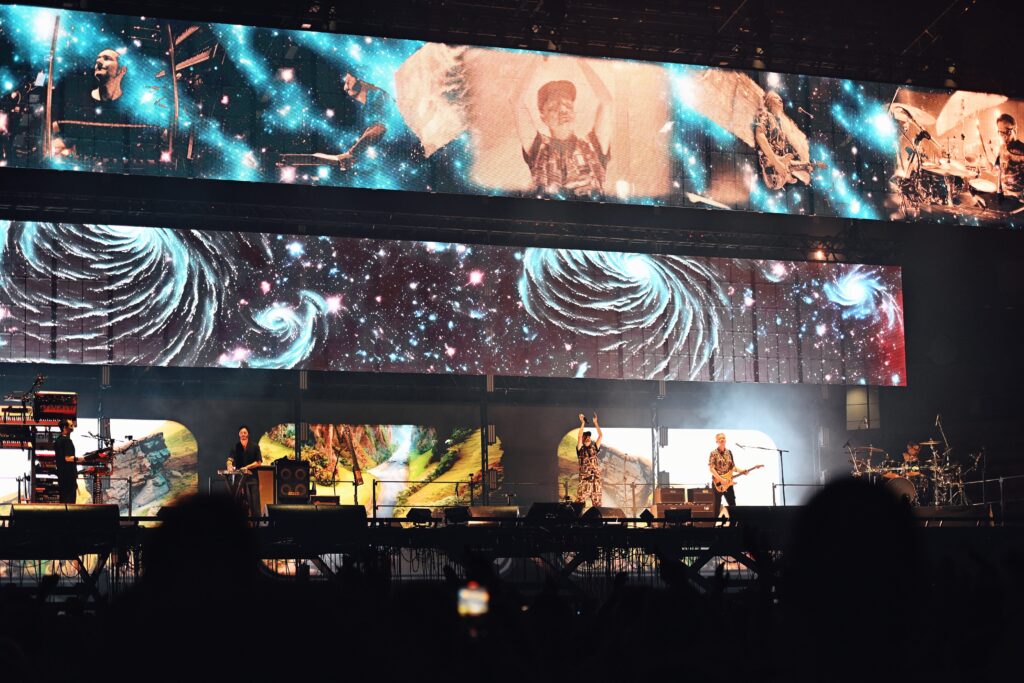

With them, we have created some of the most prominent shows in Italy over the past two years, always striving to bring experimentation and technological innovation. One of the most exciting aspects of these collaborations is the ability to cross-pollinate ideas in the creative process, resulting in fresh, never-predictable outcomes. While the artist is the show’s focal point, the stage plays an increasingly central role today, not just as a visual support but as an actual narrative universe. Lazza’s show, designed by Blearred, perfectly reflected his aesthetic, and we were given total creative freedom after an initial discussion with the team, something far from guaranteed considering the many figures involved.

We first met the Blearred team during Subsonica’s Realtà Aumentata tour in 2024. From the start, we felt a strong synergy. We took bold creative risks, building a dynamic show based on the continuous movement of three 20-meter-wide transparent LED walls and five independently lifting platforms that elevated the band members. The visual language was tightly woven into these movements, generating optical illusions, revelations, and focal points. Being deeply connected to the band, Tommaso and I wanted to reinterpret their concepts in a contemporary key, delivering a vision that remained faithful to their artistic identity. The result made us incredibly proud, especially considering how much we owe to a group that has influenced us deeply.

When we develop a tour production, we ensure that the show forms a cohesive narrative with a distinctive visual identity for each project. Artists must feel represented and enhanced by the images accompanying them. Our work is based on the dynamic nature of visuals: we use screens as vibrant, pulsating light sources, favor rhythmic and incisive editing, and strive to extract as much sonic information as possible from each track, adding layers of reactivity and dynamism. We constantly experiment with various software and techniques, from 3D to real-time algorithmic generation, from AI to traditional motion graphics, creating a specific methodology for each performance. This approach keeps our studio an inspiring space full of creative exchange and research, where every project is an opportunity to test new solutions and develop increasingly personal methods.»

You also created a generative AI video—the film accompanying Liberato’s song Turnà. How was it conceived, and how was AI used to achieve results impossible with traditional techniques?

«In November, Gabriele Ottino from SPIME.IM and I were contacted by Francesco Lettieri, the director and visionary behind Liberato’s project, who proposed that we create the music video for the lead single from the artist’s comeback album. Francesco had seen Hint, a video Gabriele and I produced for SPIME.IM’s latest album, Grey Line. Hint was our first collaboration: a dystopian, single-take hyper-trip alternating between ruined landscapes and grotesque millionaire mansions, imagining a future where social divides had grown ever wider. We experimented extensively with AI to create it, developing a personal workflow.

After that video, Gabriele and I deepened our AI research and worked on various stage and video productions, including shows for Cosmo, Nayt, Baby Gang, and Subsonica’s Universo video. Francesco essentially asked us to recreate a similar experience for Turnà. Around that time, Gabriele and I were invited to participate in the alpha test of Sora, OpenAI’s new video synthesis model. We proposed using it for the project, and after securing approval from OpenAI’s team, we immersed ourselves in the work, experimenting with an unexplored tool that produced both astonishing and unpredictable results.

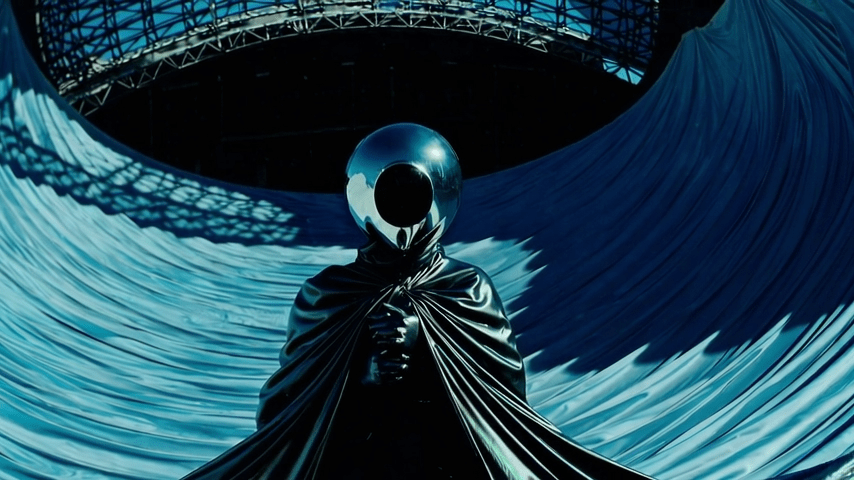

We embarked on a complex yet incredibly stimulating endeavor: blending real footage, shot in Naples with a team of camera operators, with AI-generated sequences to create a seamless visual flow in a single long take. The video is an homage to Naples, its culture, and its intrinsic madness: masks and symbols from the Neapolitan smorfia intertwine with the alleyways of the Quartieri Spagnoli, revealing hidden treasures as we soar over the Maradona Stadium, accompanied by a mysterious hooded figure.

Some of the most compelling sequences emerged from errors, from recursively repeated processes that led to unexpected outcomes. We manipulated prompts in a cut-up approach reminiscent of Burroughs, using the storyboard function to mix generated images, textual descriptions, and video cuts. These experiments generated fragments that we reprocessed, engaging in an almost alchemical exploration of the medium’s limits. The goal was to create a kaleidoscopic, multifaceted vision of Naples: a journey that, in just three minutes, could capture the city’s magnetic energy and layered essence as we perceived it as ‘outsiders.’ The video was released on January 1, positioning itself as the first European music video generated with Sora’s technology. Witnessing the enthusiastic reaction from the public and Liberato’s entire team was the best way to start the year, and we hope to continue in this direction.»